ZK Huge Grouping Model"

Robertwenzel (talk | contribs) |

m |

||

| (52 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

{{Template:Smalltalk_Author| | {{Template:Smalltalk_Author| | ||

|author=Robert Wenzel, Engineer, Potix Corporation | |author=Robert Wenzel, Engineer, Potix Corporation | ||

| − | |date= | + | |date=September 24, 2013 |

|version=ZK 6.5 (or later) | |version=ZK 6.5 (or later) | ||

}} | }} | ||

| − | |||

=Introduction= | =Introduction= | ||

| − | + | Sometimes you have a huge amount of data in your DB and want display it in a ZK Listbox or Grid using data grouping. | |

| + | Of course, you want to use paging because the list would be far too long to display in one page. Or, you want to avoid a timeout or "OutOfMemoryError" when reading / traversing large (or even unknown) number of records. | ||

| − | + | For a flat list, this topic has already been addressed in this Small Talk [http://books.zkoss.org/wiki/Small_Talks/2009/July/Handling_huge_data_using_ZK here]. | |

| − | http://books.zkoss.org/wiki/Small_Talks/2009/July/Handling_huge_data_using_ZK | ||

| − | + | Based on the concepts there (paging at DB level and separating the paging control from the grid) this article discusses a strategy and shows an exemplary implementation on how to do data grouping in a similar fashion. | |

| + | |||

| + | However things get more complicated there... so in the end I hope to provide an idea of how to hide the complex parts, and let the developer concentrate on retrieving and displaying the data. | ||

=The Challenges= | =The Challenges= | ||

Grouping is a powerful feature in ZK but also adds an extra layer of complexity. | Grouping is a powerful feature in ZK but also adds an extra layer of complexity. | ||

| + | |||

| + | One might argue whether it is useful to display such a long list in this way (especially without pre-filtering the results). | ||

| + | |||

| + | We are here to show that it is possible, maxing out the limits ... ZK GroupsModel supports indexes of int-datatype so we will go up to somewhere around Integer.MAX_VALUE (~ 2.000.000.000 records - 2 Billion) | ||

==Paging and grouping challenges== | ==Paging and grouping challenges== | ||

| − | 1. | + | 1. Groups can be open or closed (initially and interactively) |

| − | :-> | + | :-> The total count and the number of pages change, when opening/closing nodes... this needs efficient counting, and state keeping |

| + | |||

| + | 2. Groups can have arbitrary number of children | ||

| + | :-> Random access to a specific page can become tricky ... how to know the current group and position inside the group for that page | ||

| + | :--> How to implement a feasible search? | ||

| + | |||

| + | 3. Minimize DB operations (accumulating network/DB latency) | ||

| + | :-> Consider caching vs. memory consumption | ||

| − | 2. | + | 1. + 2. + 3. This article will discuss how to combine all three aspects in an efficient, memory preserving (almost predictable) way, and a few trade offs, that have to be made. Also some extension points are highlighted, where if possible the general purpose implementation can be replaced by a more specific one, knowing more details about your own data model. |

| − | |||

| − | |||

| − | + | ==Some Limitations== | |

| − | |||

| − | + | * The example only works on static data - when data changes, the model needs to be reinitialized and caches need to be cleared. | |

| + | * As not all rows are present in memory, you cannot send UI events to components currently not on the screen (you manipulate the model state instead) | ||

| + | * The example needs to store the open/close state in memory ("humans are limited", one is unlikely to toggle 100+ groups) - So should be no problem. | ||

| + | * Some "dummy" components are required to make the <grid> accept the limited (windowed) view over the data. | ||

| − | = | + | =Implementation= |

| − | + | The [[#Download|example package]] is a maven project, with the following structure: | |

| − | + | ||

| − | + | [[File:hugeGrouping_files.png]] | |

| − | + | ||

| + | The "src"-folder contains the following files/folders/packages: | ||

| + | |||

| + | Generic enabling classes (explained below): | ||

| + | ; org.zkoss.grouping.model | ||

| + | : generic paging GroupsModel implementation | ||

| + | ; org.zkoss.grouping.model.search | ||

| + | : grouping position search implementations | ||

| − | |||

| − | + | Example specific implementations (showing the usage of the above) | |

| + | ; org.zkoss.grouping.dao | ||

| + | : in memory Dao class simulating huge data | ||

| + | ; org.zkoss.grouping | ||

| + | : the actual Composer (MVC) / ViewModel (MVVM) and (Dao-backed) PagingGroupsModel implementations | ||

| − | + | Corresponding zul-files (in src/main/webapp): | |

| − | + | ; accesslog.zul | |

| + | : MVC usage of PagingGroupsModel | ||

| + | ; accesslog_mvvm.zul | ||

| + | : MVVM usage of PagingGroupsModel together with DelegatingSinglePageGroupsModel | ||

| + | ; accesslog_mvvm_default_paging.zul | ||

| + | : MVVM example highlighting the performance issues without separate paging control, using a much smaller data set | ||

==Accessing the data== | ==Accessing the data== | ||

| Line 70: | Line 97: | ||

</source> | </source> | ||

| − | The AccessDataDao is a mock implementation, generating the data on the fly not using a DB | + | The AccessDataDao is a mock implementation, generating the data on the fly not using a DB (just deterministic random values...) caching the group sizes in memory. |

Initially 40.000.000 of group's child counts stored in an int[] are still 160 MB of memory. | Initially 40.000.000 of group's child counts stored in an int[] are still 160 MB of memory. | ||

| − | So ignoring the random data generation, your Dao would need the following operations | + | So ignoring the random data generation, your Dao would need to implement the following 5 operations with according SQL/HQL/Criteria/noSQL queries. I am aware that even counting big amounts of Data at DB level is not a piece of cake. There is always some preparation of your data at DB level to be done: e.g. proper indexing, pre aggregated groups/counts (this is nothing the front end framework can support you with). |

<source lang="java"> | <source lang="java"> | ||

| Line 114: | Line 141: | ||

===The Paging Model=== | ===The Paging Model=== | ||

| − | AccessDataGroupsModel our specialized PagingGroupsModel<D, H, F> | + | AccessDataGroupsModel, our specialized PagingGroupsModel<D, H, F> uses AccessDataRecord as "data"(D) and String as "head"(H) and "foot"(F) implementation. |

This class implements the 4 model methods and a GroupingPositionSearch strategy - all delegating to AccessLogDao from above, to keep the workload at DB-Level. | This class implements the 4 model methods and a GroupingPositionSearch strategy - all delegating to AccessLogDao from above, to keep the workload at DB-Level. | ||

| Line 172: | Line 199: | ||

</source> | </source> | ||

| − | As we see the methods are focusing on counting, and retrieving data in page sized chunks. Nothing magical here, while our DB is doing the heavy work (selecting, grouping, counting, sorting) ... that's what it is optimized for. And with huge data we'll not attempt to compete with our DB, we just want as little data as possible. Your DB admin will be happy to assist you optimizing the queries. ;-) | + | As we can see the methods are focusing on counting, and retrieving data in page sized chunks. Nothing magical here, while our DB is doing the heavy work (selecting, grouping, counting, sorting) ... that's what it is optimized for. And with huge data we'll not attempt to compete with our DB, we just want as little data as possible. Your DB admin will be happy to assist you optimizing the queries and preparing the data. ;-) |

==Page/Caching, Counting & Open/Close State keeping== | ==Page/Caching, Counting & Open/Close State keeping== | ||

| Line 178: | Line 205: | ||

===Open/Close State keeping=== | ===Open/Close State keeping=== | ||

| − | The PagingGroupsModel will keep track of the open/close | + | The PagingGroupsModel will keep track of the open/close states for the toggled groups (together with the childCount of that group). |

If the Model is initialized with INITIALLY_OPEN, then only the closed nodes will be tracked, and vice versa. | If the Model is initialized with INITIALLY_OPEN, then only the closed nodes will be tracked, and vice versa. | ||

| Line 193: | Line 220: | ||

===Page/Caching=== | ===Page/Caching=== | ||

| − | The GroupsModel interface offers methods to retrieve single Groups, and Children. | + | The GroupsModel interface (ZK default) offers methods to retrieve '''single''' Groups, and Children. PagingGroupsModel implements this interface. |

| − | Whenever one of these methods is called the PagingGroupsModel will check if that position is cached for the current page, and | + | Whenever one of these methods is called the PagingGroupsModel will check if that position is cached for the current page, and if not (re)load a '''page sized chunk''' of group-/child-data from the DB and put into the respective cache. |

One problem here is, that the chunk retrieved from the DB will not match the page boundaries in the UI. | One problem here is, that the chunk retrieved from the DB will not match the page boundaries in the UI. | ||

| Line 210: | Line 237: | ||

'''Page size vs. Group size''': While the UI-Pages have a constant size the groups may have a variable size, and their offset may shift because of previously opened/closed nodes, the offset of the chunks loaded from the DB would vary, whenever nodes are toggled. | '''Page size vs. Group size''': While the UI-Pages have a constant size the groups may have a variable size, and their offset may shift because of previously opened/closed nodes, the offset of the chunks loaded from the DB would vary, whenever nodes are toggled. | ||

| − | As we want to benefit from caching at all possible levels, keeping the method/query parameters constant will reduce our required cache size at Dao method level or in DB and will avoid duplicate load of overlapping chunks (with multiple users having different UI | + | As we want to benefit from caching at all possible levels, keeping the method/query parameters constant will reduce our required cache size at Dao method level or in DB and will avoid duplicate load of overlapping chunks (with multiple users having different UI states). |

So in the scenario above whenever e.g. Element 3 of Group 1 needs to be loaded the PagingGroupsModel will load the chunk "Group1 Child0-9" calling this.loadChildrenPage(1, 0, 10). Perfect for caching, as it would never call e.g. this.loadChildrenPage(1, 3, 10). | So in the scenario above whenever e.g. Element 3 of Group 1 needs to be loaded the PagingGroupsModel will load the chunk "Group1 Child0-9" calling this.loadChildrenPage(1, 0, 10). Perfect for caching, as it would never call e.g. this.loadChildrenPage(1, 3, 10). | ||

| Line 229: | Line 256: | ||

The disadvantage of this approach is, that in most cases at least two chunks need to be loaded to display a single page, however when caching a few of these chunks in the model, you'll have one of the two already loaded for the previous page no matter which direction you navigate. | The disadvantage of this approach is, that in most cases at least two chunks need to be loaded to display a single page, however when caching a few of these chunks in the model, you'll have one of the two already loaded for the previous page no matter which direction you navigate. | ||

| − | I think the better cachability outrules the slight memory overhead. At a page size of 50, we might have 49 records too much in memory (which will likely be reused on the next page, when the user navigates, by one Page). But | + | I think the better cachability outrules the slight memory overhead. At a page size of 50, we might have 49 records too much in memory (which will likely be reused on the next page, when the user navigates, by one Page). But reducing the permutations of method/query parameters by 98% which highly improves the chance of a cache hit at method or DB level. I would even keep the cache bigger, so that back navigation by few pages does not cause a reload. |

Also very small groups will result in more DB round trips, but hey ... we are talking about BIG data :) | Also very small groups will result in more DB round trips, but hey ... we are talking about BIG data :) | ||

| Line 243: | Line 270: | ||

''Which group- / child-index does page X start with??'' | ''Which group- / child-index does page X start with??'' | ||

| − | + | The brute force way of just iterating over all the groups accumulating their size until the (page * pageSize) is reached is not feasible. | |

| − | It will either cause many DB operations, or one big traversal over a huge result set of Group counts (Keep in mind, we don't know how many groupCounts we need to accumulate until we reach | + | It will either cause many DB operations, or one big traversal over a huge result set of Group counts (Keep in mind, we don't know how many groupCounts we need to accumulate until we reach page X). |

I am not a DB expert, so maybe there is a solution for this problem already present at DB level (and we'd have to adjust the result by the number of closed nodes the DB does not know about) and I am not a scientist so there is maybe a more sophisticated perfectly balanced search algorithm - Please comment if you know :) | I am not a DB expert, so maybe there is a solution for this problem already present at DB level (and we'd have to adjust the result by the number of closed nodes the DB does not know about) and I am not a scientist so there is maybe a more sophisticated perfectly balanced search algorithm - Please comment if you know :) | ||

| Line 253: | Line 280: | ||

1. Bisect the number of groups into Ranges and have the DB count the rows for that range | 1. Bisect the number of groups into Ranges and have the DB count the rows for that range | ||

| − | : | + | : (I am sure the DB is faster than you in java code (assuming efficient indexing) ;)) e.g. |

: <code>SELECT count(*) WHERE group_key >= x AND group_key < y</code> | : <code>SELECT count(*) WHERE group_key >= x AND group_key < y</code> | ||

| Line 260: | Line 287: | ||

3. Define the next smaller range always halving the intervals. | 3. Define the next smaller range always halving the intervals. | ||

| − | Repeat 1-3 | + | Repeat 1-3 until you find the group. |

| − | * For 1024 groups you' | + | * For 1024 groups you won't have to repeat that more than 9 times until you find the group we are interested in. |

* For 1024 * 1024 (about a million) it is only 19 times | * For 1024 * 1024 (about a million) it is only 19 times | ||

* For 1024 * 1024 * 1024 (about a billion) it is only 29 times | * For 1024 * 1024 * 1024 (about a billion) it is only 29 times | ||

| Line 279: | Line 306: | ||

# 157 < 158 :D the page start must be in in group #157 | # 157 < 158 :D the page start must be in in group #157 | ||

| − | + | Another positive sideeffect: The most counting intensive queries will only have little variance in their query parameters, so they will be most likely in the cache already, and the memory consumption for one cache entry is very low... 3 integers (range starts and end as cache key, and another integer for the count) -> Caching a few hundred(or thousand) of those results does not weigh too much, in contrast to caching each group count in memory (that's up to the DB) | |

1st query is always the same | 1st query is always the same | ||

| Line 288: | Line 315: | ||

Still we have a penalty of several DB queries for a random access to a page, but after that the cache is hot, and single page navigation will take a similar path in the binary search, causing much fewer cache misses (except for some rare cases, where you cross the boundary of a bigger range, but still the penalty will never be as big as the first request). Additionally the ranges will be the same for concurrent users, offering the possibility to cache at a lower application level too. | Still we have a penalty of several DB queries for a random access to a page, but after that the cache is hot, and single page navigation will take a similar path in the binary search, causing much fewer cache misses (except for some rare cases, where you cross the boundary of a bigger range, but still the penalty will never be as big as the first request). Additionally the ranges will be the same for concurrent users, offering the possibility to cache at a lower application level too. | ||

| − | Since the user will most likely begin | + | Since the user will most likely begin from the first page, I added an alternative approach starting from group zero, like a bottom-up search, doubling the ranges in each iteration until the searchIndex is exceeded and then refine the result reusing the binary approach. |

This postpones the expensive counting operations to a time when a "random access occurs"... as I said I am not a Scientist, so I just defined a threshold for the searchIndex. For bigger search indexes the top-to-bottom search is used. | This postpones the expensive counting operations to a time when a "random access occurs"... as I said I am not a Scientist, so I just defined a threshold for the searchIndex. For bigger search indexes the top-to-bottom search is used. | ||

| Line 304: | Line 331: | ||

=Using the Model in the UI= | =Using the Model in the UI= | ||

| − | We have seen how to access the data, and how the caching will hopefully have some positive effects. | + | We have seen how to access the data, and how the caching will hopefully have some positive effects. So all that remaining is the ViewModel, and the View. |

==accesslog_mvvm.zul== | ==accesslog_mvvm.zul== | ||

| Line 354: | Line 381: | ||

</source> | </source> | ||

| − | + | As you can see this is just a pretty basic Grouping Grid not using the "paging"-mold, but a separate paging control, as we only send a delegating Model (model="@load(vm.currentPageModel)") to the <grid> component. This is to only present as little information to the grid component as possible to avoid the component grid from caching data internally, and to gain full control over what is loaded. | |

| − | Because the Grid component | + | Because the Grid component expect groups to open and close in a consistent way, a dummy head and/or foot needs to be added if a Page does not start with a group head, or end with a foot. (this is slightly unpleasant, but what do we get in return) ... the biggest ZK grouping grid ever. |

==AccessDataGroupingViewModel== | ==AccessDataGroupingViewModel== | ||

| Line 420: | Line 447: | ||

</source> | </source> | ||

| − | + | Besides the currently somewhat dodgy call to groupsModel.getSinglePageModel() to get the fake group models there is nothing remarkable here either | |

* initialize the model | * initialize the model | ||

* handle paging events (updateOffsetIndex(x) in the delegating page model) | * handle paging events (updateOffsetIndex(x) in the delegating page model) | ||

| Line 426: | Line 453: | ||

The rest is done in PagingGroupsModel. | The rest is done in PagingGroupsModel. | ||

| − | + | =Running the demo application= | |

| + | |||

| + | ==Using Maven== | ||

| + | |||

| + | Unzip the Package and execute the mvn command: | ||

mvn jetty:run | mvn jetty:run | ||

| − | |||

| − | The application will be very responsive, whereever you navigate, | + | open this URL in browser: |

| + | : http://localhost:8080/hugeGrouping/accesslog_mvvm.zul | ||

| + | |||

| + | The application will be very responsive, whereever you navigate, open/ close groups. Looking at the console log, you'll get some information about Cache hits and Cache MISSES, notice the high number of cache hits after randomly navigating to a high page, and then visiting the next/previous page. | ||

The initial setup of the AccessLogDao (mock) is 40 million groups with 8 to 88 children randomly distributed, so we roughly end up with 2 BILLION rows. | The initial setup of the AccessLogDao (mock) is 40 million groups with 8 to 88 children randomly distributed, so we roughly end up with 2 BILLION rows. | ||

| Line 442: | Line 475: | ||

* loading 1 or 2 (or several very small) group children chunks (each of page size) | * loading 1 or 2 (or several very small) group children chunks (each of page size) | ||

* loading 0 or 1 new chunk(s) (of page size) of group information (without the children payload) | * loading 0 or 1 new chunk(s) (of page size) of group information (without the children payload) | ||

| − | * usually a small number of requests that earn Cache MISSES during the binary | + | * usually a small number of requests that earn Cache MISSES during the binary searches for the next page's startIndex. |

** This could be improved with a little more decisive paging event handler being aware of single page increments / decrements. To keep the sample simple we treat every position search as a random access, and still have good performance without special case handling. | ** This could be improved with a little more decisive paging event handler being aware of single page increments / decrements. To keep the sample simple we treat every position search as a random access, and still have good performance without special case handling. | ||

| + | |||

| + | ==War File== | ||

| + | |||

| + | If you cannot (don't want to) use maven there is also a war-file available for download. It can be deployed into an existing web app server (e.g. [[http://tomcat.apache.org/download-70.cgi Tomcat]]) | ||

=Appendix= | =Appendix= | ||

==Download== | ==Download== | ||

| − | [http://sourceforge.net/projects/zkforge/files/Small%20Talks/ | + | |

| + | download maven project [http://sourceforge.net/projects/zkforge/files/Small%20Talks/Huge%20Data%20Grouping/hugeGrouping.zip/download hugeGrouping.zip (~24.7 kB)] | ||

| + | |||

| + | download as war-file [http://sourceforge.net/projects/zkforge/files/Small%20Talks/Huge%20Data%20Grouping/hugeGrouping.war/download hugeGrouping.war (~25 MB)] | ||

=Comments= | =Comments= | ||

Latest revision as of 09:12, 24 September 2013

Robert Wenzel, Engineer, Potix Corporation

September 24, 2013

ZK 6.5 (or later)

Introduction

Sometimes you have a huge amount of data in your DB and want display it in a ZK Listbox or Grid using data grouping. Of course, you want to use paging because the list would be far too long to display in one page. Or, you want to avoid a timeout or "OutOfMemoryError" when reading / traversing large (or even unknown) number of records.

For a flat list, this topic has already been addressed in this Small Talk here.

Based on the concepts there (paging at DB level and separating the paging control from the grid) this article discusses a strategy and shows an exemplary implementation on how to do data grouping in a similar fashion.

However things get more complicated there... so in the end I hope to provide an idea of how to hide the complex parts, and let the developer concentrate on retrieving and displaying the data.

The Challenges

Grouping is a powerful feature in ZK but also adds an extra layer of complexity.

One might argue whether it is useful to display such a long list in this way (especially without pre-filtering the results).

We are here to show that it is possible, maxing out the limits ... ZK GroupsModel supports indexes of int-datatype so we will go up to somewhere around Integer.MAX_VALUE (~ 2.000.000.000 records - 2 Billion)

Paging and grouping challenges

1. Groups can be open or closed (initially and interactively)

- -> The total count and the number of pages change, when opening/closing nodes... this needs efficient counting, and state keeping

2. Groups can have arbitrary number of children

- -> Random access to a specific page can become tricky ... how to know the current group and position inside the group for that page

- --> How to implement a feasible search?

3. Minimize DB operations (accumulating network/DB latency)

- -> Consider caching vs. memory consumption

1. + 2. + 3. This article will discuss how to combine all three aspects in an efficient, memory preserving (almost predictable) way, and a few trade offs, that have to be made. Also some extension points are highlighted, where if possible the general purpose implementation can be replaced by a more specific one, knowing more details about your own data model.

Some Limitations

- The example only works on static data - when data changes, the model needs to be reinitialized and caches need to be cleared.

- As not all rows are present in memory, you cannot send UI events to components currently not on the screen (you manipulate the model state instead)

- The example needs to store the open/close state in memory ("humans are limited", one is unlikely to toggle 100+ groups) - So should be no problem.

- Some "dummy" components are required to make the <grid> accept the limited (windowed) view over the data.

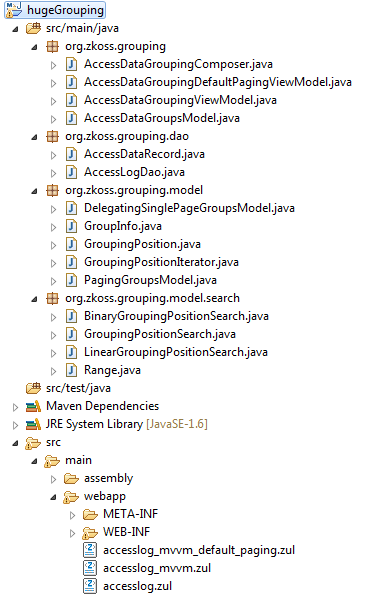

Implementation

The example package is a maven project, with the following structure:

The "src"-folder contains the following files/folders/packages:

Generic enabling classes (explained below):

- org.zkoss.grouping.model

- generic paging GroupsModel implementation

- org.zkoss.grouping.model.search

- grouping position search implementations

Example specific implementations (showing the usage of the above)

- org.zkoss.grouping.dao

- in memory Dao class simulating huge data

- org.zkoss.grouping

- the actual Composer (MVC) / ViewModel (MVVM) and (Dao-backed) PagingGroupsModel implementations

Corresponding zul-files (in src/main/webapp):

- accesslog.zul

- MVC usage of PagingGroupsModel

- accesslog_mvvm.zul

- MVVM usage of PagingGroupsModel together with DelegatingSinglePageGroupsModel

- accesslog_mvvm_default_paging.zul

- MVVM example highlighting the performance issues without separate paging control, using a much smaller data set

Accessing the data

Data Record and Dao

We start with a simple data record representing access log data.

package org.zkoss.grouping.dao;

import java.util.Date;

public class AccessDataRecord {

private String ipAddress;

private String browser;

private long contentLength;

private String country;

private Date accessTime;

private String url;

//... Constructor + getters

The AccessDataDao is a mock implementation, generating the data on the fly not using a DB (just deterministic random values...) caching the group sizes in memory. Initially 40.000.000 of group's child counts stored in an int[] are still 160 MB of memory.

So ignoring the random data generation, your Dao would need to implement the following 5 operations with according SQL/HQL/Criteria/noSQL queries. I am aware that even counting big amounts of Data at DB level is not a piece of cake. There is always some preparation of your data at DB level to be done: e.g. proper indexing, pre aggregated groups/counts (this is nothing the front end framework can support you with).

package org.zkoss.grouping.dao;

public class AccessLogDao {

public int getGroupCount() {

//your DB query to count the groups

}

public List<GroupInfo<String, String>> getGroupInfos(int startIndex, int pageSize) {

//your DB query and mapping to create GroupInfos for this page of groups

}

public List<AccessDataRecord> getChildInfos(int groupIndex, int startIndex, int pageSize) {

//your DB query and load this page of children in the group

}

/**

* This method will count a lot and return a constant value as long as data does not change,

* so caching would be desirable

* @return

*/

public int getTotalChildCount() {

//your DB impl to calc the total number of children

}

/**

* This method will be called quite often with recurring parameters,

* so caching (of most frequently used params) would be desirable !!!

* @return the childrenCount between 2 groups... (including groupIndexFrom and excluding groupIndexTo)

*/

public int getChildCountBetween(int groupIndexFrom, int groupIndexTo) {

//your DB impl to calc the number of children between 2 groups

}

The Paging Model

AccessDataGroupsModel, our specialized PagingGroupsModel<D, H, F> uses AccessDataRecord as "data"(D) and String as "head"(H) and "foot"(F) implementation. This class implements the 4 model methods and a GroupingPositionSearch strategy - all delegating to AccessLogDao from above, to keep the workload at DB-Level.

package org.zkoss.grouping;

import java.util.List;

import org.zkoss.grouping.dao.AccessLogDao;

import org.zkoss.grouping.dao.AccessDataRecord;

import org.zkoss.grouping.model.GroupInfo;

import org.zkoss.grouping.model.PagingGroupsModel;

import org.zkoss.grouping.model.search.BinaryGroupingPositionSearch;

import org.zkoss.grouping.model.search.GroupingPositionSearch;

class AccessDataGroupsModel extends

PagingGroupsModel<AccessDataRecord, String, String> {

private AccessLogDao groupsDao;

public AccessDataGroupsModel(AccessLogDao groupsDao, int pageSize, boolean initialOpen, boolean hasGroupfoot) {

super(pageSize, initialOpen, hasGroupfoot);

this.groupsDao = groupsDao;

setPositionSearch(binarySearch());

}

@Override

protected int loadGroupCount() {

return groupsDao.getGroupCount();

}

@Override

protected List<GroupInfo<String, String>> loadGroupPage(int startIndex, int pageSize) {

return groupsDao.getGroupInfos(startIndex, pageSize);

}

@Override

protected List<AccessDataRecord> loadChildrenPage(int groupIndex, int startIndex, int pageSize) {

return groupsDao.getChildInfos(groupIndex, startIndex, pageSize);

}

@Override

protected int getTotalChildCount() {

return groupsDao.getTotalChildCount();

}

private GroupingPositionSearch binarySearch() {

return new BinaryGroupingPositionSearch(this, 4096) {

@Override

protected int getChildCountBetween(int groupIndexFrom, int groupIndexTo) {

return groupsDao.getChildCountBetween(groupIndexFrom, groupIndexTo);

}

};

}

}

As we can see the methods are focusing on counting, and retrieving data in page sized chunks. Nothing magical here, while our DB is doing the heavy work (selecting, grouping, counting, sorting) ... that's what it is optimized for. And with huge data we'll not attempt to compete with our DB, we just want as little data as possible. Your DB admin will be happy to assist you optimizing the queries and preparing the data. ;-)

Page/Caching, Counting & Open/Close State keeping

Open/Close State keeping

The PagingGroupsModel will keep track of the open/close states for the toggled groups (together with the childCount of that group).

If the Model is initialized with INITIALLY_OPEN, then only the closed nodes will be tracked, and vice versa.

Assuming a human will not take the time to toggle an outrageous number of groups, this Map (groupIndex => childcount) will stay relatively small and should not compromise our performance and memory expectations. Additionally the total number of toggled groups is updated on every open/close interaction.

This information is used to adjust the number of total children (for the UI paging calculations) or the number of children between 2 groups (for Position search) - as the DB won't keep that UI state.

see:

- PagingGroupsModel#getCurrentRowCount()

- PagingGroupsModel#getToggledCountBetween(int groupIndexFrom, int groupIndexTo)

- PagingGroupsModel#toggleGroup(int groupIndex, boolean open)

Page/Caching

The GroupsModel interface (ZK default) offers methods to retrieve single Groups, and Children. PagingGroupsModel implements this interface. Whenever one of these methods is called the PagingGroupsModel will check if that position is cached for the current page, and if not (re)load a page sized chunk of group-/child-data from the DB and put into the respective cache.

One problem here is, that the chunk retrieved from the DB will not match the page boundaries in the UI.

e.g.

1 2 3 4

|.........|.........|.........|......... -> UI pages (page size 10)

0.....1.........|......2...3......4....5 -> Groups | Chunks Group 0 open

01.........|......2...3......4....5..... -> Groups | Chunks Group 0 closedPage size vs. Group size: While the UI-Pages have a constant size the groups may have a variable size, and their offset may shift because of previously opened/closed nodes, the offset of the chunks loaded from the DB would vary, whenever nodes are toggled.

As we want to benefit from caching at all possible levels, keeping the method/query parameters constant will reduce our required cache size at Dao method level or in DB and will avoid duplicate load of overlapping chunks (with multiple users having different UI states).

So in the scenario above whenever e.g. Element 3 of Group 1 needs to be loaded the PagingGroupsModel will load the chunk "Group1 Child0-9" calling this.loadChildrenPage(1, 0, 10). Perfect for caching, as it would never call e.g. this.loadChildrenPage(1, 3, 10).

see:

- AccessDataGroupsModel#loadGroupPage(int startIndex, int pageSize)

- AccessDataGroupsModel#loadChildrenPage(int groupIndex, int startIndex, int pageSize)

The same strategy applies to caching the Groups. PagingGroupsModel will also load chunks of Groups with fixed intervals. In case groups are displayed with a foot, the groups cache size can be halved, as the group foot will consume one row too.

cachedGroups = Collections.synchronizedMap(new LRUMap(pageSize * (hasGroupFoot ? 1 : 2))); //cache at least 2 pages ...

cachedChildren = Collections.synchronizedMap(new LRUMap(pageSize * 4)); //cache enough for at least a few pagesThe cache size is reasonably small and the LRUMap will make sure it will not grow beyond its capacity throwing out the oldest Groups/Children, when overflowing.

The disadvantage of this approach is, that in most cases at least two chunks need to be loaded to display a single page, however when caching a few of these chunks in the model, you'll have one of the two already loaded for the previous page no matter which direction you navigate.

I think the better cachability outrules the slight memory overhead. At a page size of 50, we might have 49 records too much in memory (which will likely be reused on the next page, when the user navigates, by one Page). But reducing the permutations of method/query parameters by 98% which highly improves the chance of a cache hit at method or DB level. I would even keep the cache bigger, so that back navigation by few pages does not cause a reload.

Also very small groups will result in more DB round trips, but hey ... we are talking about BIG data :)

Random Access Paging

When just navigating page by page, this would already be sufficient... but what if you want to navigate to page no 234.455.234 :-o

This is no problem when Groups are closed by default, as each group will only consist of a Head (or Head and Foot).

If pages are open by default you suddenly encounter a question you might not even have thought about before, when doing pagination in a flat list without the grouping level - plus some groups open/closed.

Which group- / child-index does page X start with??

The brute force way of just iterating over all the groups accumulating their size until the (page * pageSize) is reached is not feasible. It will either cause many DB operations, or one big traversal over a huge result set of Group counts (Keep in mind, we don't know how many groupCounts we need to accumulate until we reach page X).

I am not a DB expert, so maybe there is a solution for this problem already present at DB level (and we'd have to adjust the result by the number of closed nodes the DB does not know about) and I am not a scientist so there is maybe a more sophisticated perfectly balanced search algorithm - Please comment if you know :)

So here is my approach which will keep the required DB operations at a low level, and again provide high chance for cache hits, especially for the most expensive often recurring count operations.

A binary search:

1. Bisect the number of groups into Ranges and have the DB count the rows for that range

- (I am sure the DB is faster than you in java code (assuming efficient indexing) ;)) e.g.

SELECT count(*) WHERE group_key >= x AND group_key < y

2. Check whether the result (adjusted by group-heads(, -feet) and the toggled groups) is bigger or smaller.

3. Define the next smaller range always halving the intervals.

Repeat 1-3 until you find the group.

- For 1024 groups you won't have to repeat that more than 9 times until you find the group we are interested in.

- For 1024 * 1024 (about a million) it is only 19 times

- For 1024 * 1024 * 1024 (about a billion) it is only 29 times

So you see this scales well 2x the data, requires only 1 additional DB round trip.

When starting with 1024 Groups a possible sequence of ranges might be

- 0 < 512 (WHERE group_key >= 0 AND group_key < 512)

- 0 < 256 ...

- 128 < 192

- 128 < 160

- 144 < 160

- 152 < 160

- 156 < 160

- 156 < 158

- 157 < 158 :D the page start must be in in group #157

Another positive sideeffect: The most counting intensive queries will only have little variance in their query parameters, so they will be most likely in the cache already, and the memory consumption for one cache entry is very low... 3 integers (range starts and end as cache key, and another integer for the count) -> Caching a few hundred(or thousand) of those results does not weigh too much, in contrast to caching each group count in memory (that's up to the DB)

1st query is always the same 2nd query has only 2 options 3rd query has 4 options ...

Still we have a penalty of several DB queries for a random access to a page, but after that the cache is hot, and single page navigation will take a similar path in the binary search, causing much fewer cache misses (except for some rare cases, where you cross the boundary of a bigger range, but still the penalty will never be as big as the first request). Additionally the ranges will be the same for concurrent users, offering the possibility to cache at a lower application level too.

Since the user will most likely begin from the first page, I added an alternative approach starting from group zero, like a bottom-up search, doubling the ranges in each iteration until the searchIndex is exceeded and then refine the result reusing the binary approach. This postpones the expensive counting operations to a time when a "random access occurs"... as I said I am not a Scientist, so I just defined a threshold for the searchIndex. For bigger search indexes the top-to-bottom search is used.

For the case someone has a better strategy, I extracted the GroupingPositionSearch interface for everyone to implement themselves (maybe a pure DB stored procedure).

See:

- org.zkoss.grouping.model.search.GroupingPositionSearch

- org.zkoss.grouping.model.search.LinearGroupingPositionSearch

- an implementation for fun... to compare the performance difference

- org.zkoss.grouping.model.search.BinaryGroupingPositionSearch

- all one needs is to implement the method getChildCountBetween() and query the DB

Enough pseudo theoretical talk ... let's use this!

Using the Model in the UI

We have seen how to access the data, and how the caching will hopefully have some positive effects. So all that remaining is the ViewModel, and the View.

accesslog_mvvm.zul

<window border="normal" height="810px" width="1200px"

apply="org.zkoss.bind.BindComposer" viewModel="@id('vm')

@init('org.zkoss.grouping.AccessDataGroupingViewModel')">

<paging pageSize="@load(vm.pageSize)"

activePage="@bind(vm.activePage)"

totalSize="@load(vm.totalSize)"

onPaging="@command('changePage', activePage=event.activePage)"

detailed="true" />

<grid model="@load(vm.currentPageModel)" >

<columns>

<column label="ip" ></column>

<column label="url" ></column>

<column label="time" ></column>

<column label="country" ></column>

<column label="browser" ></column>

<column label="length" ></column>

</columns>

<template name="model:group">

<group label="@init(each)" visible="@init(not(vm.isDummy(each)))" ></group>

</template>

<template name="model">

<row>

<label value="@init(each.ipAddress)"></label>

<label value="@init(each.url)"></label>

<label value="@init(each.accessTime)"></label>

<label value="@init(each.country)"></label>

<label value="@init(each.browser)"></label>

<label value="@init(each.contentLength)"></label>

</row>

</template>

<template name="model:groupfoot">

<groupfoot visible="@init(not(vm.isDummy(each)))">

<cell colspan="6" sclass="z-groupfoot-inner">

<label value="@init(each)" sclass="z-groupfoot-cnt" />

</cell>

</groupfoot>

</template>

</grid>

</window>As you can see this is just a pretty basic Grouping Grid not using the "paging"-mold, but a separate paging control, as we only send a delegating Model (model="@load(vm.currentPageModel)") to the <grid> component. This is to only present as little information to the grid component as possible to avoid the component grid from caching data internally, and to gain full control over what is loaded.

Because the Grid component expect groups to open and close in a consistent way, a dummy head and/or foot needs to be added if a Page does not start with a group head, or end with a foot. (this is slightly unpleasant, but what do we get in return) ... the biggest ZK grouping grid ever.

AccessDataGroupingViewModel

public class AccessDataGroupingViewModel {

private static final String DUMMY_FOOT = new String();

private static final String DUMMY_HEAD = new String();

//TODO: inject

private AccessLogDao groupsDao = AccessLogDao.getInstance();

private PagingGroupsModel<AccessDataRecord, String, String> groupsModel;

private int pageSize;

private int activePage;

private DelegatingSinglePageGroupsModel<AccessDataRecord, String, String> currentPageModel;

@Init

public void init() {

pageSize = 20;

activePage = 0;

groupsModel = new AccessDataGroupsModel(groupsDao, pageSize,

PagingGroupsModel.INITIALLY_OPEN, PagingGroupsModel.GROUPFOOT_ON);

currentPageModel = groupsModel.getSinglePageModel(DUMMY_HEAD, DUMMY_FOOT, this, "totalSize");

refreshRows();

}

@Command("changePage")

@NotifyChange("currentPageModel")

public void onChangePage() {

refreshRows();

}

private void refreshRows() {

currentPageModel.updateOffsetIndex(activePage * pageSize);

}

public int getPageSize() {

return pageSize;

}

public int getActivePage() {

return activePage;

}

public void setActivePage(int activePage) {

this.activePage = activePage;

}

public int getTotalSize() {

return groupsModel.getCurrentRowCount();

}

public GroupsModel<AccessDataRecord, String, String> getCurrentPageModel() {

return currentPageModel;

}

public boolean isDummy(String string) {

return DUMMY_HEAD == string || DUMMY_FOOT == string ;

}

}Besides the currently somewhat dodgy call to groupsModel.getSinglePageModel() to get the fake group models there is nothing remarkable here either

- initialize the model

- handle paging events (updateOffsetIndex(x) in the delegating page model)

The rest is done in PagingGroupsModel.

Running the demo application

Using Maven

Unzip the Package and execute the mvn command:

mvn jetty:run

open this URL in browser:

The application will be very responsive, whereever you navigate, open/ close groups. Looking at the console log, you'll get some information about Cache hits and Cache MISSES, notice the high number of cache hits after randomly navigating to a high page, and then visiting the next/previous page.

The initial setup of the AccessLogDao (mock) is 40 million groups with 8 to 88 children randomly distributed, so we roughly end up with 2 BILLION rows.

The memory consumption will remain stable once the Dao is initialized (these 160MB - mentioned above - would usually be on the DB and not in memory) when navigating the list. Once the cache of the binary grouping position search is hot, the number of DB calls is low (usually around 1-4 less frequently bigger) per page increment / decrement, after randomly accessing any arbitrary page (despite some special cases - traversing the bounds of a big binary search region).

And the data returned from the DB is very little:

- loading 1 or 2 (or several very small) group children chunks (each of page size)

- loading 0 or 1 new chunk(s) (of page size) of group information (without the children payload)

- usually a small number of requests that earn Cache MISSES during the binary searches for the next page's startIndex.

- This could be improved with a little more decisive paging event handler being aware of single page increments / decrements. To keep the sample simple we treat every position search as a random access, and still have good performance without special case handling.

War File

If you cannot (don't want to) use maven there is also a war-file available for download. It can be deployed into an existing web app server (e.g. [Tomcat])

Appendix

Download

download maven project hugeGrouping.zip (~24.7 kB)

download as war-file hugeGrouping.war (~25 MB)

Comments

| Copyright © Potix Corporation. This article is licensed under GNU Free Documentation License. |